POSITS

Unums and Posits

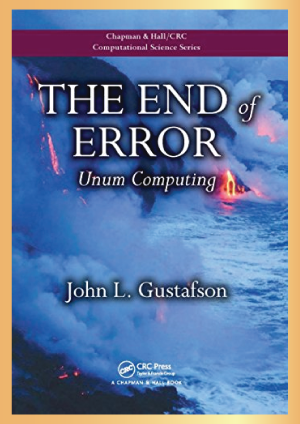

Dr. John Gustafson, a renowned American mathematician and the first Gordon Bell Prize awardee in 1988, introduced unums (universal numbers) as a different way to represent real numbers using a finite number of bits, an alternative to IEEE floating point. See, for example, his 2015 book The End of Error. Posits are a hardware-friendly version of unums.

A conventional floating point number (IEEE 754) has a sign bit, a set of bits to represent the exponent, and a set of bits called the significand (formerly called the mantissa). For a given size number, the lengths of the various parts are fixed. A 64-bit floating point number, for example, has 1 sign bit, 11 exponent bits, and 52 bits for the significand.
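
To make the fixed 1/11/52 split concrete, here is a minimal Python sketch that pulls the three fields out of a 64-bit float. The function name `ieee754_fields` is chosen for illustration only; it relies on nothing beyond the standard `struct` module.

```python
import struct

def ieee754_fields(x: float):
    """Return (sign, exponent, significand) of a 64-bit IEEE 754 float as integers."""
    # Reinterpret the 8 bytes of the double as one unsigned 64-bit integer.
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = bits >> 63                      # 1 bit
    exponent = (bits >> 52) & 0x7FF        # 11 bits, stored with a bias of 1023
    significand = bits & ((1 << 52) - 1)   # 52 stored bits
    return sign, exponent, significand

print(ieee754_fields(1.0))
print(ieee754_fields(-2.5))
```

Whatever the value of `x`, the field widths never change, which is exactly the property posits give up.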

A posit adds an additional category of bits, known as the regime. A posit has four parts:

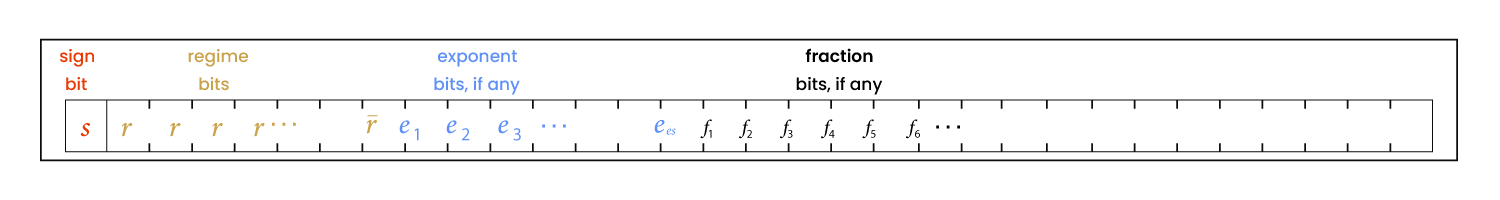

An IEEE floating point number has a sign bit, an exponent, and a significand, the last of which corresponds to the fraction part of a posit. Unlike IEEE numbers, the exponent and fraction parts of a posit do not have fixed length. The sign and regime bits have first priority. Next, the remaining bits, if any, go into the exponent. If there are still bits left after the exponent, the rest go into the fraction.

Bit pattern of a posit

To understand posits in more detail, and why they have certain advantages over conventional floating point numbers, we need to unpack their bit representation. A posit number type is specified by two numbers: the total number of bits n, and the maximum number of bits devoted to the exponent, es. (Yes, it’s a little odd to use a two-letter variable name, but that’s conventional in this context.) Together we say we have a posit<n, es> number.

Sign bit

As with an IEEE floating point number, the first bit of a posit is the sign bit.

If the sign bit is 1, representing a negative number, take the two’s complement of the rest of the bits before unpacking the regime, exponent, and fraction bits.
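
The sign-handling step can be sketched as follows. This is an illustrative helper, not part of any posit library: the name `posit_body` and the convention of passing the posit as a Python integer together with its width `n` are assumptions made for the example.

```python
def posit_body(bits: int, n: int):
    """Return (sign bit, remaining n-1 bits ready for regime/exponent/fraction decoding)."""
    sign = (bits >> (n - 1)) & 1
    body_mask = (1 << (n - 1)) - 1
    body = bits & body_mask
    if sign:
        # Negative posit: two's complement of the remaining n-1 bits.
        body = (-body) & body_mask
    return sign, body

# 0b11100000 and 0b00100000 share the same body once the sign is handled.
print(posit_body(0b11100000, 8))
print(posit_body(0b00100000, 8))
```

The two exceptional patterns (all zeros after the sign bit) should be checked before this step, since they do not decode into fields.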


Regime bits

After the sign bit come the regime bits. The number of regime bits is variable.

There could be anywhere from 1 to n-1 regime bits. How do you know when the regime bits stop? When a run of identical bits ends, either because you run out of bits or because you run into an opposite bit.

If the first bit after the sign bit is a 0, then the regime bits continue until you run out of bits or encounter a 1. Similarly, if the first bit after the sign bit is a 1, the regime bits continue until you run out of bits or encounter a 0. The bit that indicates the end of a run is not included in the regime; the regime is a string of all 0’s or all 1’s.
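
As a small sketch of the rule just described, the following Python function measures the regime run. It assumes the bits after the sign bit are given as a string of '0' and '1' characters; the name `regime_run` is hypothetical.

```python
def regime_run(body: str):
    """Return (regime bit, run length) for the bits that follow the sign bit."""
    first = body[0]
    # Count how many leading characters equal the first one.
    run_length = len(body) - len(body.lstrip(first))
    return first, run_length

print(regime_run("0001101"))  # run of 0's ended by a 1
print(regime_run("1111111"))  # run of 1's that ends because the bits run out
```

The terminating bit, when there is one, sits at index `run_length` and is not counted in the run.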


Exponent bits

The sign bit and regime bits get first priority. If there are any bits left, the exponent bits are next in line.

There may be no exponent bits. The maximum number of exponent bits is specified by the number es. If there are at least es bits after the sign bit, regime bits, and the regime terminating bit, the next es bits belong to the exponent. If there are fewer than es bits left, what bits remain belong to the exponent.


Fraction bits

If there are any bits left after the sign bit, regime bits, regime terminating bit, and the exponent bits, they all belong to the fraction.
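
The priority order (regime first, then exponent, then fraction) can be sketched in a few lines of Python. As an assumption for this example, the bits after the sign bit are passed as a string, already two's-complemented if the posit is negative; `split_fields` is a hypothetical name.

```python
def split_fields(body: str, es: int):
    """Split the bits after the sign bit into (regime, exponent, fraction) strings."""
    first = body[0]
    m = len(body) - len(body.lstrip(first))  # length of the regime run
    regime = body[:m]
    rest = body[m + 1:]      # skip the terminating bit; empty if the run used every bit
    exponent = rest[:es]     # at most es bits, possibly fewer or none
    fraction = rest[es:]     # whatever is left over
    return regime, exponent, fraction

print(split_fields("0001101", 2))
print(split_fields("1111101", 2))
print(split_fields("1111110", 2))
```

The second and third calls show the squeeze: a long regime leaves a truncated exponent and no fraction, or no exponent at all.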


Interpreting the components of a posit

Next we look at how the components described above represent a real number.

Let b be the sign bit in a posit. The sign s of the number represented by the bit pattern is positive if this bit is 0 and negative otherwise:

$$s = (-1)^b$$

Let m be the number of bits in the regime, i.e. the length of the run of identical bits following the sign bit. Then let k = -m if the regime consists of all 0’s, and let k = m-1 otherwise.

The useed u of the posit is determined by es, the maximum exponent size:

$$u = 2^{2^{es}}$$

The exponent e is simply the exponent bits interpreted as an unsigned integer.

The fraction f is 1 + the fraction bits interpreted as following a binary point. For example, if the fraction bits are 10011, then f = 1.10011 in binary.

Putting it all together, the value of the posit number is the product of the contributions from the sign bit, regime bits, exponent bits (if any), and fraction bits (if any):

$$x = s \, u^k \, 2^e \, f = (-1)^b \, f \, 2^{e + k \, 2^{es}}$$
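
The following Python sketch decodes a posit by multiplying those contributions, using exact rationals so no rounding hides mistakes. It is an illustration under stated assumptions, not a reference implementation: the function name `decode_posit` is hypothetical, the regime value k is taken as -m for a run of m 0's and m-1 for a run of m 1's, and a truncated exponent field is padded with zeros on the right, which is the convention of the posit standard as I understand it.

```python
from fractions import Fraction

def decode_posit(bits: int, n: int, es: int):
    """Decode an n-bit posit<n, es> pattern; returns a Fraction, or None for the infinite posit."""
    mask = (1 << n) - 1
    bits &= mask
    if bits == 0:
        return Fraction(0)            # all zeros: the number zero
    if bits == 1 << (n - 1):
        return None                   # 1 followed by zeros: the single infinite posit
    b = bits >> (n - 1)               # sign bit
    if b:
        bits = (-bits) & mask         # two's complement before unpacking the fields
    body = format(bits & (mask >> 1), "0{}b".format(n - 1))
    first = body[0]
    m = len(body) - len(body.lstrip(first))   # regime run length
    k = -m if first == "0" else m - 1         # regime value (assumed convention)
    rest = body[m + 1:]                       # skip the terminating bit
    exp_bits, frac_bits = rest[:es], rest[es:]
    # Assumption: missing low-order exponent bits count as 0.
    e = int(exp_bits.ljust(es, "0"), 2) if es else 0
    f = 1 + (Fraction(int(frac_bits, 2), 1 << len(frac_bits)) if frac_bits else 0)
    u = Fraction(2) ** (2 ** es)              # useed
    s = -1 if b else 1
    return s * u ** k * Fraction(2) ** e * f

print(decode_posit(0b0000110111011101, 16, 3))
print(decode_posit(0b11100000, 8, 0))
```

In the first call the fields are sign 0, regime 000 (k = -3), exponent 101 (e = 5), and fraction 11011101, so the value is 256^-3 × 2^5 × (1 + 221/256).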


Exceptional posits

There are two exceptional posits, both with all zeros after the sign bit. A string of n 0’s represents the number zero, and a 1 followed by n-1 0’s represents ±∞.

There’s only one zero for posit numbers, unlike IEEE floats that have two kinds of zero, one positive and one negative.

There’s also only one infinite posit number. For that reason, you could say that posits represent projective real numbers rather than extended real numbers. IEEE floats have two kinds of infinities, positive and negative, as well as several kinds of non-numbers. Posits have only one entity that does not correspond to a real number, and that is ±∞.

Dynamic range and precision

The dynamic range and precision of a posit number depend on the value of es. The larger es is, the larger the contribution of the regime and exponent bits will be, and so the larger range of values one can represent. So increasing es increases dynamic range. Dynamic range, measured in decades, is the log base 10 of the ratio between the largest and smallest representable positive values.

However, increasing es means decreasing the number of bits available to the fraction, and so decreases precision. One of the benefits of posit numbers is this ability to pick es to adjust the trade-off between dynamic range and precision to meet your needs.

The largest representable finite posit is labeled maxpos. This value occurs when k is as large as possible, i.e. when all the bits after the sign bit are 1’s. In this case k = n-2. So maxpos equals

$$u^{n-2} = \left(2^{2^{es}}\right)^{n-2}$$

The smallest representable positive number, minpos, occurs when k is as negative as possible, i.e. when the largest possible number of bits after the sign bit are 0’s. They can’t all be zeros or else we have the representation for the number 0, so there must be a 1 on the end. In this case m = n-2 and k = 2-n, so minpos equals

$$u^{2-n} = \left(2^{2^{es}}\right)^{2-n}$$

The dynamic range is given by the log base 10 of the ratio between maxpos and minpos:

$$\log_{10}\left(u^{2n-4}\right) = (2n-4) \, 2^{es} \log_{10} 2$$

For example, a 16-bit posit with es = 1 has a dynamic range of 17 decades, whereas a 16-bit IEEE floating point number has a dynamic range of 12 decades. The former has a fraction of 12 bits for numbers near 1, while the latter has a significand of 10 bits. So a posit<16,1> number has both a greater dynamic range and greater precision (near 1) than its IEEE counterpart.
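
The decade counts quoted above can be checked with a short Python sketch. It assumes maxpos is the useed raised to the power n-2 and that minpos is its reciprocal; for the IEEE side it uses the largest finite half-precision value (65504) and the smallest positive subnormal (2^-24). The function name `posit_dynamic_range` is illustrative.

```python
import math

def posit_dynamic_range(n: int, es: int):
    """Return (maxpos, dynamic range in decades) for posit<n, es>."""
    useed = 2 ** (2 ** es)
    maxpos = useed ** (n - 2)            # exact integer
    # minpos = 1 / maxpos, so log10(maxpos / minpos) = 2 * log10(maxpos)
    decades = 2 * math.log10(maxpos)
    return maxpos, decades

maxpos, decades = posit_dynamic_range(16, 1)
half_decades = math.log10(65504 / 2 ** -24)   # IEEE half precision, subnormals included

print(maxpos, round(decades, 2), round(half_decades, 2))
```

Rounded to whole decades, the two results come out at about 17 and 12.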

Note that the precision of a posit number depends on its size. This is the sense in which posits have tapered precision. Numbers near 1 have more precision, while extremely big numbers and extremely small numbers have less. This is often what you want. Typically the vast majority of numbers in a computation are roughly on the order of 1, while with the largest and smallest numbers, you mostly want them to not overflow or underflow.
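
Tapered precision can be made visible by counting how many fraction bits survive at each scale. The sketch below is an illustration with a hypothetical function name; it assumes the regime value k corresponds to a run of k+1 ones when k is non-negative and a run of -k zeros when k is negative, so that k = 0 covers numbers near 1.

```python
def fraction_bits(n: int, es: int, k: int) -> int:
    """Number of fraction bits left in posit<n, es> for regime value k."""
    if not 2 - n <= k <= n - 2:
        raise ValueError("k out of range for this posit size")
    m = k + 1 if k >= 0 else -k        # regime run length
    used = 1 + min(m + 1, n - 1)       # sign bit + regime run + terminator (if it fits)
    remaining = n - used
    return max(remaining - es, 0)      # exponent bits are served before the fraction

for k in (0, 2, 5, 10, 14):
    print(k, fraction_bits(16, 1, k))
```

For posit<16,1> the count falls from 12 fraction bits near 1 to none at the extremes of the range.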

QUIRE: computation results without intermediate rounding or accuracy loss

The quire is an extension implemented alongside posits to improve the accuracy of posit arithmetic. It is essentially a 512-bit accumulator that lets iterative computations proceed almost without intermediate rounding: instead of rounding every result before it is used as an input operand of the next arithmetic operation, intermediate results stay in the accumulator and are rounded only at the end. In matrix multiplication, for example, the accuracy error is reduced by up to 4 orders of magnitude. Experiments comparing the accuracy and timing performance of posit numbers and IEEE 754 floats on General Matrix Multiplication (GEMM) and max-pooling benchmarks show that 32-bit posits can be up to 4 orders of magnitude more accurate than 32-bit floats thanks to the quire register. Furthermore, this improvement does not imply a trade-off in execution time: 32-bit posits can perform as fast as 32-bit floats, and thus execute faster than 64-bit floats.
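
The idea of deferring rounding can be illustrated with an analogy in plain Python. This is not posit or quire arithmetic: it uses 32-bit IEEE floats (via `struct`) as the rounded format and an exact `Fraction` as a stand-in for the wide accumulator, and all function names are hypothetical.

```python
import struct
from fractions import Fraction

def to_f32(x) -> float:
    """Round a number to the nearest 32-bit IEEE 754 float."""
    return struct.unpack("f", struct.pack("f", float(x)))[0]

def dot_rounded_each_step(xs, ys) -> float:
    """Dot product that rounds after every multiply and every add."""
    acc = 0.0
    for x, y in zip(xs, ys):
        acc = to_f32(acc + to_f32(x * y))
    return acc

def dot_exact_accumulator(xs, ys) -> float:
    """Dot product that accumulates exactly and rounds once at the end."""
    acc = Fraction(0)
    for x, y in zip(xs, ys):
        acc += Fraction(x) * Fraction(y)
    return to_f32(acc)

xs = [1e8, 1.0, -1e8]
ys = [1.0, 1.0, 1.0]
print(dot_rounded_each_step(xs, ys), dot_exact_accumulator(xs, ys))
```

With step-by-step rounding the small term is absorbed by the large one and lost when the large terms cancel, while the exact accumulator keeps it. A hardware quire achieves a similar effect with a fixed-width register rather than arbitrary-precision rationals.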

Calligo has enabled native posit and quire support in hardware by leveraging a high-performance RISC-V core.
